Advancing life science through our mutual expertise

Let's do this together: you as the scientist, we as your sidekick.

Westburg is known for providing both supplies and knowledge in the field of life sciences. In this knowledge center, we share our know-how on life science topics.

Life Science topics

Pick our brain and learn through resources, blogs, workflows, and comparison charts.

How to reduce cytotoxicity during cell transfection?

Cytotoxicity refers to harmful effects on cells. Learn how to reduce them.

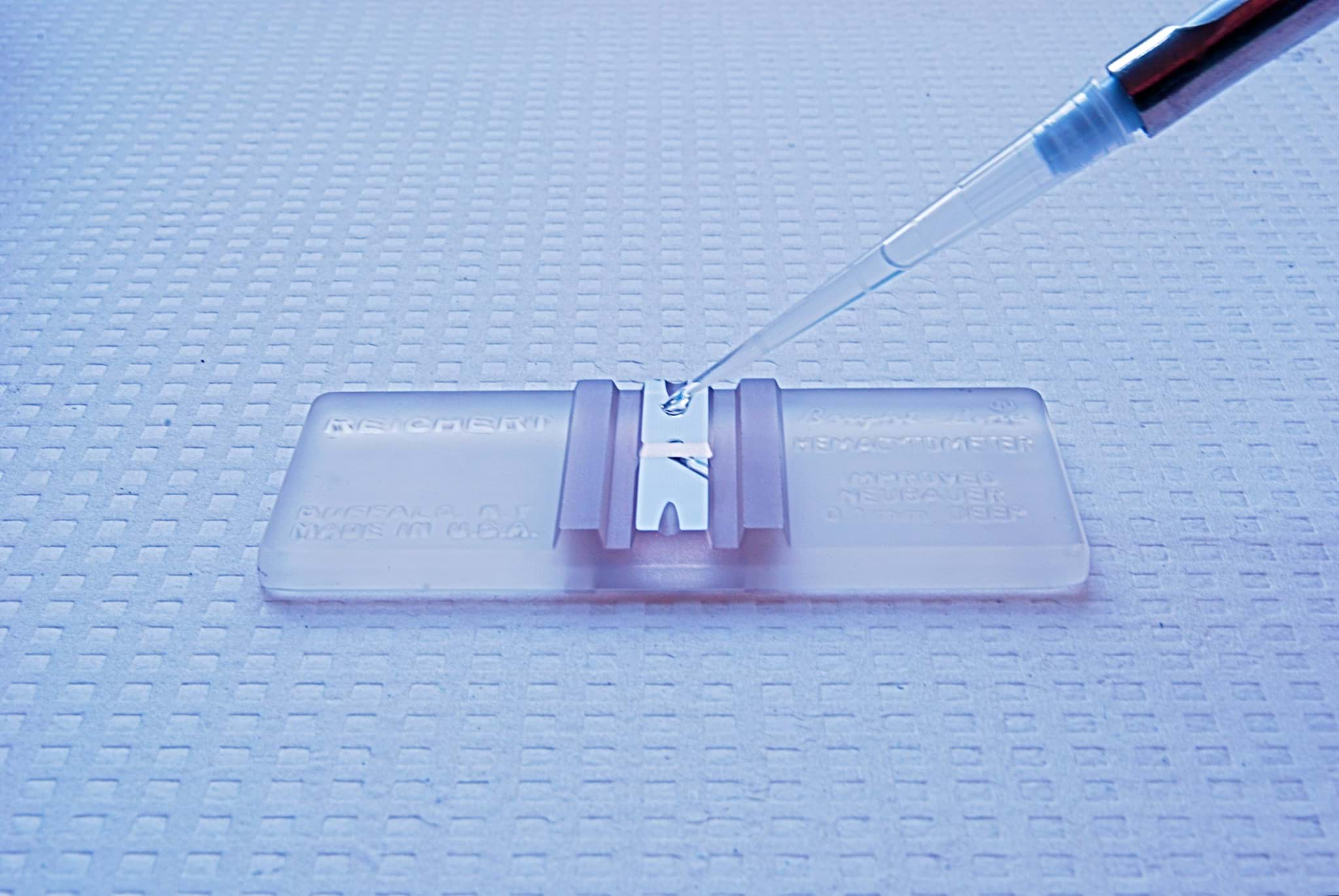

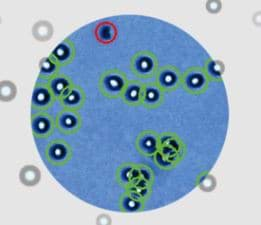

How to overcome cell counting stain toxicity?

Did you know that trypan blue is carcinogenic?

How to optimize qPCR in 9 steps?

The quantitative PCR workflow is straightforward. In 9 steps, this means:

5 ways you don't have to rely on a fluorescence microscopy expert ever again

Discover how to get high-quality images without needing an expert.

What type of PCR system are you looking for?

Ever wondered which system best suits your needs?

Do you have a tough time reproducing experiments?

Two-thirds of researchers have tried and failed to reproduce results.

How to stop Mycoplasma from destroying your cell culture?

Don't underestimate the effect of Mycoplasma on your cell culture. Prevent this problem today.

9 common manual cell counting problems and how to fix them

You will never want to go back to manual cell counting again.

5 reasons to switch to serum-free culture media

Serum-free media help you achieve ideal cell culture conditions.

What to look for in an automated cell counter?

How do you make the right choice?

What to do with precious contaminated cells in culture?

Which cell counter matches my needs?

Luna Family Cell Counters are trusted companions for many researchers.